A short field guide for SME leaders who are looking for ROI, not “pilot purgatory”

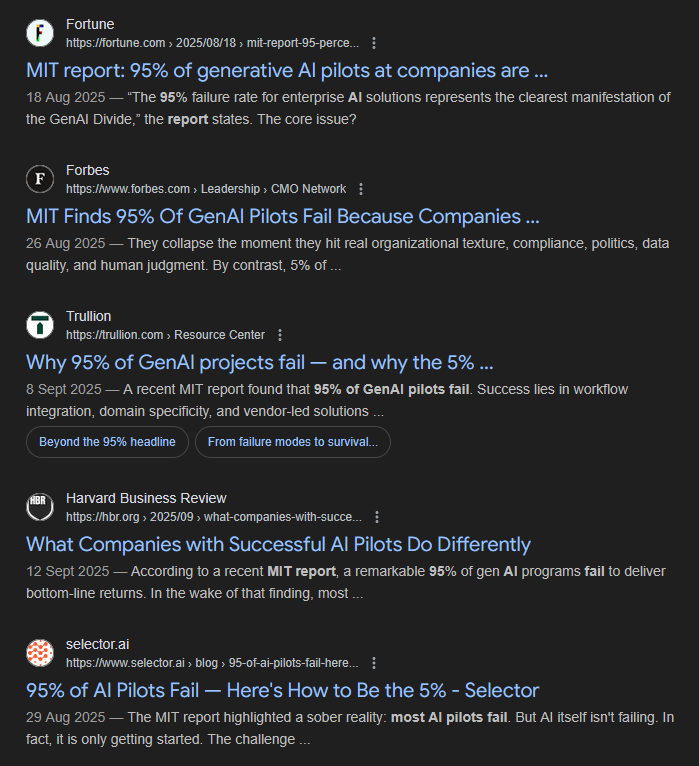

You might have seen the viral headlines claiming “95% of AI pilots fail” attributed to MIT.

Don’t panic.

As Wharton Professor Kevin Werbach pointed out in a detailed critique, that statistic collapses under scrutiny.

The report defined “failure” as anything without immediate P&L impact – which, as he noted, “does not mean zero returns.”

Furthermore, there appears to be little to no evidence supporting the 95% figure.

But while the report’s claims are suspect, the danger of AI pilots failing is real.

The most common failure mode of AI pilots isn’t a crash – it’s simply stagnation: endless experiments that never reach production, lots of meetings, and a team that gradually loses interest. The project dies slowly.

As one engineer put it: “The proof-of-concept looked amazing in a demo, but it broke on our real data.”

That is pilot purgatory. I’ve watched good teams get stuck there for months.

If you lead an engineering or professional services SME, you’ve probably had the same week as everyone else. One person is quietly using ChatGPT on their phone to rewrite a client email. Another is asking IT to “build us an AI assistant” because they saw a demo on LinkedIn. Someone in the boardroom wants a plan by next Friday.

If you’re the one signing off on AI spend: read on to learn how to ask better questions and avoid expensive mistakes.

If you’re the one running the pilot: this is your implementation playbook.

Either way, I’m not here to sell you the future, but I do want to help you avoid wasting the next 6 months.

Distinction: AI is probabilistic, your business is not

- Traditional software is deterministic: A + B = output C

- Generative AI is probabilistic: A + B = probably output C

(This is the difference between traditional rules-based automation and AI-driven automation)

This distinction changes how we manage quality, safety, and accountability.

- If you expect calculator behaviour, you will be surprised.

- If you design workflows that assume mistakes will happen, you’ll get value without sleepless nights.

It’s best to treat AI like a creative intern. Brilliant at drafting. Occasionally unreliable. You wouldn’t let an intern sign off a technical report. Same principle.

A simple rule holds up in the real world: AI drafts. Humans decide.

AI can get you from 0% to 80% fast. The final 20% is where liability lives.

The hidden cost that kills ROI: the verification tax

Most AI pilots look good until you measure the human-in-the-loop time.

If AI saves 30 minutes drafting a report, but your engineer spends 45 minutes verifying and correcting it, you’ve built a time-wasting machine with a shiny interface. I’ve seen this scenario play out, and the engineer ends up more frustrated than before – now they’re doing the same work plus babysitting a tool that was supposed to help. And they’re being told by leadership that they “must use AI or else”.

Let’s use a blunt calculation: net value = time saved drafting – time spent verifying

This stops “AI wow” from turning into “AI busywork”.

If the verification time stays high after a few weeks, you haven’t found a good use case or your data is a mess. Either way, the pilot needs to change direction.
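The blunt calculation can be sketched in a few lines of Python. This is an illustration only: the function name `net_minutes_saved` and the sample task log are hypothetical, and the first entry is the 30-versus-45-minute case described earlier.

```python
from statistics import mean


def net_minutes_saved(drafting_saved: float, verifying_spent: float) -> float:
    """Net value of one AI-assisted task in minutes (negative means time lost)."""
    return drafting_saved - verifying_spent


# Hypothetical log: (minutes saved drafting, minutes spent verifying) per task.
task_log = [(30, 45), (30, 20), (25, 10)]

per_task = [net_minutes_saved(saved, spent) for saved, spent in task_log]
average_net = mean(per_task)

print(per_task)  # [-15, 10, 15]
print(f"Average net minutes per task: {average_net:.1f}")
```

Log both numbers per task rather than a single “time saved” estimate, so the verification tax stays visible.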

Step 1: What’s an AI Pilot?

Many pilots fail because they start with “Let’s try AI.”

That phrase produces scope creep, random use cases, and budget arguments, so make a clean decision upfront.

There are three common tiers of AI pilot.

Tier 1: The Toolkit Pilot (OpEx)

Buying commercial licences for a defined group – Microsoft Copilot, ChatGPT Team, Gemini, Claude, etc.

Success metric: adoption and time saved on routine writing.

Risk: Low, if you control data privacy.

Common pilots: Meeting transcription and action extraction, rewriting client emails for tone, summarising tender documents before bid/no-bid decisions, first-draft responses to RFIs.

Tier 2: The Retrofit Pilot (Configuration)

Switching on AI features inside tools you already use – CRM, finance systems, document platforms.

Success metric: throughput (invoices processed per hour, tenders reviewed per week).

This tends to be the sweet spot. Vendor partnerships succeed at double the rate of internal builds (67% vs 33%). Why? Because the vendor already solved the hard parts: permissions, UI, and security.

Common pilots:

- Invoice data extraction into your ERP

- AI-assisted email triage in a support or enquiries inbox

- CRM features that summarise account history before calls

- Document comparison and redlining in your existing platform

Tier 3: The R&D Pilot (CapEx)

Building a custom system on private data.

Success metric: measurable value tied to a problem worth serious money.

Common pilots: RAG system over your internal wiki, custom chatbot trained on your technical standards, automated compliance checking, bespoke proposal generator from past winning bids.

Rule of thumb: only approve Tier 3 when the problem is unique to your IP and worth more than €50k to solve. Otherwise, you’re funding a science experiment. I’ve seen or heard of far too many teams building AI-powered chatbots that nobody asked for because “executives wanted an AI strategy” but never defined the actual business problem.

Step 2: Pick a boring target on purpose

Most teams aim AI at the wrong place first. They go for public chatbots or client-facing automation, and that’s where reputational and liability risk lives.

Start where errors are easier to catch and impact is easy to measure.

Good starting points:

High volume, text-heavy, repetitive, internal-facing work.

- Summarising tender documents

- Categorising invoices

- Drafting routine client responses

- Summarising daily site logs and snag lists

Avoid:

Customer-facing chatbots, safety-critical automation, calculation-heavy tasks, anything hard to verify quickly.

This is important because early wins create trust. Early public failures create fear and policy overreach – and suddenly you’re explaining to the board why the AI promised a client something it shouldn’t have.

Step 3: Fix the unsexy stuff first

For leadership: This is where most pilots quietly fail. Bad data in means confident nonsense out – and your team won’t always tell you.

AI doesn’t make bad data better. It makes bad data louder. If you point AI at 10 years of folders called “Final_FINAL_v7” full of mixed-up regulations, it will confidently cite the wrong standard. One IT team described their internal knowledge-base pilot this way: “It lies confidently.” That’s what happens when nobody owns content freshness.

The Fix: Create a curated “clean room” dataset for the pilot. Only current, approved, machine-readable documents go in. AI can only look there.
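One way to picture the clean-room rule is as a filter over a document register. The sketch below assumes you track three things per document: whether it is approved, whether it is machine-readable, and whether it has been superseded. The `Document` fields, the `clean_room` function, and the file names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Document:
    name: str
    approved: bool                        # signed off by a named owner
    machine_readable: bool                # text-based file, not a scanned image
    superseded_on: Optional[date] = None  # None means still current


def clean_room(documents: List[Document]) -> List[Document]:
    """Keep only current, approved, machine-readable documents."""
    return [
        doc for doc in documents
        if doc.approved and doc.machine_readable and doc.superseded_on is None
    ]


# Hypothetical register entries.
register = [
    Document("Final_FINAL_v7.docx", approved=False, machine_readable=True),
    Document("old_standard.pdf", True, True, superseded_on=date(2023, 1, 1)),
    Document("scanned_site_spec.pdf", approved=True, machine_readable=False),
    Document("current_design_standard.pdf", approved=True, machine_readable=True),
]

pilot_docs = clean_room(register)
print([doc.name for doc in pilot_docs])  # ['current_design_standard.pdf']
```

The point is less the code than the discipline: someone has to own those three flags for every document that goes in.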

The Shadow AI problem

Fixing internal data is only half the battle – you also have to manage where your data is leaking out.

Staff are almost certainly using personal AI tools for work already. If your team is big enough, it’s guaranteed.

The choice is between uncontrolled use, with confidential client or proprietary internal data being pasted into consumer models, and a sanctioned sandbox with clear rules.

Provide an enterprise-grade option – preferably hosted in the EU to satisfy data residency – and a straightforward policy: use the sanctioned tool for work, do not paste client-sensitive data into free or personal tools.

Step 4: Run the pilot like a construction project

A common rollout pattern, “buy licences for everyone and see what happens”, looks decisive, but it often becomes expensive noise. Many people try the tool once or twice, don’t figure out how to fit it into their workflow, and quietly stop. Then you’re paying for licences nobody uses.

Instead, pick a vanguard team of 5-8 AI champions: maybe a lead engineer, two junior engineers who feel the admin pain, one ops person, and at least one sceptic (I find that useful). The job of the AI champions is to learn, experiment, figure out where the value is, share their wins, and write the SOPs the rest of the business can follow.

Define the handover point clearly. AI generates the draft, and a named human reviews and approves. That person carries the liability, not the tool. Your work product is your work product.

Write out the workflow step-by-step. Keep it boring. Boring scales.

Step 5: Measure what matters (Calibration)

Don’t judge performance on vibes – you wouldn’t trust a pressure gauge without calibrating it first.

Before going live, run 20 past examples through the tool. Compare the AI output to the known “truth” (what your engineers actually did). If it fails this calibration, do not deploy.
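A calibration run like this can be scored with a very small script. The sketch below assumes a task with a short, checkable answer (invoice categorisation here). The 90% pass bar, the `calibrate` function, and the sample data are assumptions for illustration, not a standard. For free-text drafts, swap the comparison function for a reviewer’s yes/no judgement.

```python
from typing import Callable, Sequence, Tuple

PASS_THRESHOLD = 0.90  # assumption: agree your own bar before running the test


def normalised_match(ai_output: str, known_truth: str) -> bool:
    """Crude comparison: equal after trimming whitespace and ignoring case."""
    return ai_output.strip().lower() == known_truth.strip().lower()


def calibrate(
    cases: Sequence[Tuple[str, str]],
    is_match: Callable[[str, str], bool] = normalised_match,
    threshold: float = PASS_THRESHOLD,
) -> Tuple[float, bool]:
    """Return (share of cases matching the known truth, deploy decision)."""
    if not cases:
        raise ValueError("calibration needs at least one past example")
    hits = sum(1 for ai_output, truth in cases if is_match(ai_output, truth))
    rate = hits / len(cases)
    return rate, rate >= threshold


# 20 hypothetical past invoices: (category the tool chose, category the team chose).
past_cases = [("Materials", "materials")] * 17 + [("Travel", "Subcontractor")] * 3

rate, deploy = calibrate(past_cases)
print(f"{rate:.0%} matched, deploy: {deploy}")  # 85% matched, deploy: False
```

Set the threshold before you look at the results. Otherwise the bar tends to move to wherever the tool happens to land.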

Once deployed, track leading indicators:

- Throughput: Did the team process more work without overtime?

- Error capture: Did the tool help spot issues a tired human might miss?

- The “Boredom Index”: Did junior staff spend less time on formatting and filing? This matters because if your best people are still doing tedious admin, the AI is solving the wrong problem. And they’ll leave for somewhere that values their time.

Then return to the simplest question: Is verification time dropping?

If it stays high, kill the pilot. I know that feels like failure – nobody wants to walk into a boardroom and say “we’re stopping.” But a pilot is a feasibility study, and killing the wrong project is a success. It’s the pilots that limp on for months, consuming time and goodwill, that really hurt.
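To take the vibes out of that decision, you can compare the first and last week of logged verification times. This is a rough sketch, not a statistical test: the function name, the 20% minimum drop, and the sample figures are all assumptions you should replace with your own.

```python
from statistics import median
from typing import List


def verification_is_dropping(
    weekly_minutes: List[List[float]], min_drop: float = 0.20
) -> bool:
    """True if the last week's median verification time is at least
    `min_drop` (a fraction) below the first week's median."""
    if len(weekly_minutes) < 2:
        raise ValueError("need at least two weeks of measurements")
    first = median(weekly_minutes[0])
    last = median(weekly_minutes[-1])
    return last <= first * (1 - min_drop)


# Hypothetical minutes spent verifying each task, grouped by week.
improving = [[45, 40, 50], [38, 42, 35], [30, 28, 33], [22, 25, 20]]
stuck = [[45, 40, 50], [44, 46, 43], [45, 41, 47], [44, 45, 42]]

print(verification_is_dropping(improving))  # True
print(verification_is_dropping(stuck))      # False
```

The median is used here so that one painful outlier task doesn’t decide the pilot’s fate on its own.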

A director’s checkpoint you can use this week

Before you do anything else, answer these five questions:

- Tier selected: are we doing Toolkit, Retrofit, or R&D?

- Target defined: is the first use case internal, measurable, and low-risk?

- Data plan: do we have a clean dataset and machine-readable inputs?

- Safe environment: do staff have a sanctioned sandbox and clear red lines?

- Measurement: are we tracking throughput and verification time?

If any answer is fuzzy, pause and tighten it. That pause saves months.

Start small, start boring, start now

AI does useful work when you treat it like engineering: clear scope, clean inputs, controlled execution, proper inspection.

Start with one boring process in Tier 2. Pick a small team. Measure verification time for 30 days. Then decide whether to scale, refine, or stop.

The goal isn’t to “do AI”. The goal is to get work done better. Keep that distinction and you’ll escape pilot purgatory entirely.